The University of California, Los Angeles (UCLA) has abandoned its plan to incorporate facial recognition technology into its campus surveillance systems.

UCLA Administrative Vice Chancellor Michael Beck wrote in a letter to Fight for the Future, a digital privacy advocacy group, that the school would drop its plans because of backlash from its student body, according to USA Today. Students voiced concerns during a 30-day comment period in June 2019 and at a town hall meeting this January.

“We have determined that the potential benefits are limited and are vastly outweighed by the concerns of the campus community,” Beck wrote. The university had not yet identified a specific software or made any significant plans to deploy it, he added.

Fight for the Future launched its own campaign against UCLA's plan earlier this year after conducting an experiment, according to Inside Higher Ed. The group ran more than 400 pictures of UCLA athletes and staff through Amazon's facial recognition software and found that 58 people were incorrectly matched with photos in a mugshot database. Most of the people falsely matched were members of minority groups.

Fight for the Future then told the university that it planned to release the results to a news outlet. Within 24 hours, UCLA sent its statement to the group, according to Evan Greer, the group's deputy director.

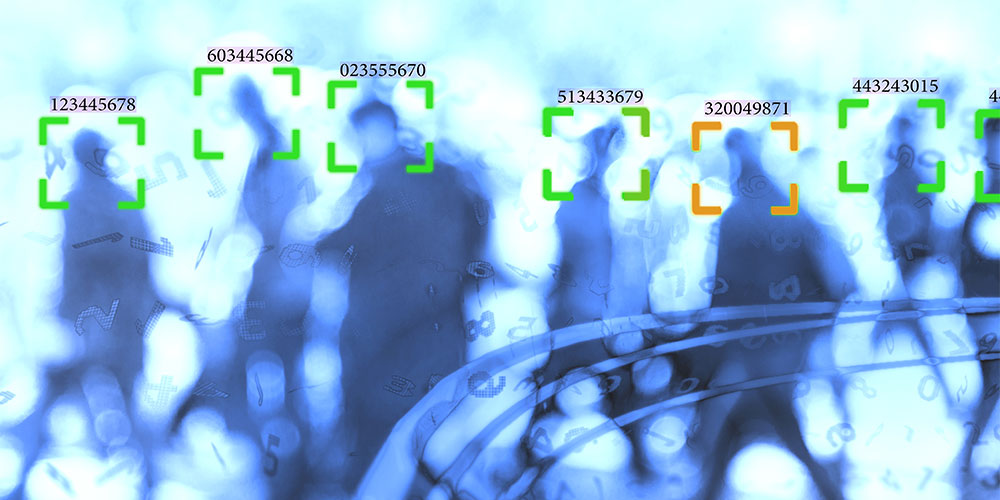

Facial recognition technology has been a hotly debated topic for some time now. Earlier this year, Fight for the Future launched a nationwide effort to ban facial recognition technology from all U.S. university and college campuses.

“Facial recognition surveillance spreading to college campuses would put students, faculty, and community members at risk. This type of invasive technology poses a profound threat to our basic liberties, civil rights, and academic freedom,” said Greer. “Schools that are already using this technology are conducting unethical experiments on their students. Students and staff have a right to know if their administrations are planning to implement biometric surveillance on campus.”

Several months earlier, the Security Industry Association (SIA) issued a letter to Congress outlining its concerns regarding blanket bans on public-sector uses of facial recognition technology.

The letter encourages the federal government to collaborate with all stakeholders to address concerns about the technology's use and recommends that federal leaders provide a consistent set of rules across the country.

In May 2019, San Francisco became the first U.S. city to prohibit local agencies from using the technology. Under the new law, any city department that wants to use surveillance technology must first get approval from the Board of Supervisors.

“Recent calls for bans on facial recognition technology are based on a misleading picture of how the technology works and is used today,” stated SIA CEO Don Erickson. “Facial recognition technology has benefited Americans in many ways, such as helping to fight human trafficking, thwart identity thieves and improve passenger facilitation at airports and enhance aviation security.”

The technology is already used by numerous municipal police departments and airports, as well as by at least one public school district.

In 2016, the Center on Privacy and Technology at Georgetown University Law Center found that half of American adults have their photos in a law enforcement facial recognition network.